In the Intelligent User Interface (IUI) group, we conduct research on user interfaces where human-data interaction occurs. The lab uses a variety of devices, ranging from smartphones and tablets to wearable devices and VR headsets. We also study the analysis of sensor information, such as touch and handwriting data, as well as VR technology, with applications to education.

Research Introduction

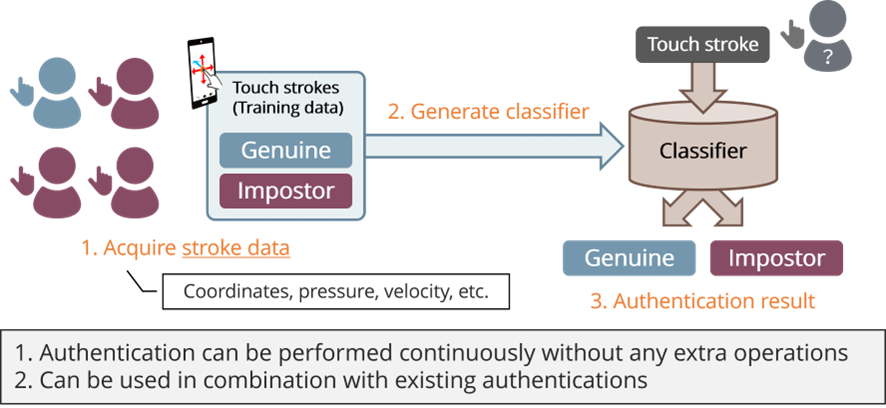

Stroke-based authentication on smartphone

Initial-login authentication methods on existing smartphones, such as patterns, fingerprints, and face ID, carry some risk of being compromised by others. Continuous authentication based on touch strokes can be an effective way to mitigate this risk.

In this study, we aim to improve the accuracy of stroke-based authentication and its robustness against impostors. We also aim to build an authentication system with high imitation resistance and to automatically generate training data for actual operation.

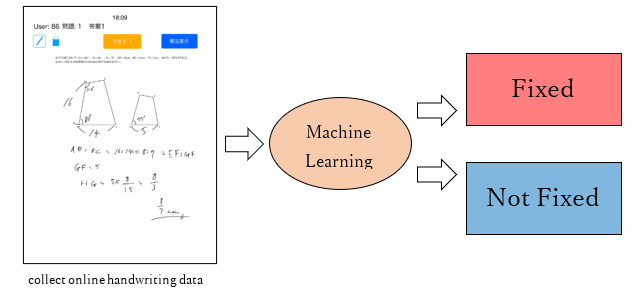

Retention Estimation using Online Handwriting Data

In recent years, learning applications on tablets have spread rapidly. In learning, it is important to present questions that match each learner’s retention, but it is difficult to judge accurately whether material has been retained from answer correctness alone. In this study, we apply machine learning to features extracted from online handwriting data (time-series data of writing coordinates, writing pressure, etc.) to estimate whether the respondent understands the question.
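As a rough illustration of what "features extracted from online handwriting data" could look like, the sketch below summarizes a sequence of pen samples into a few numbers. The `PenSample` type, the `extract_features` function, and the chosen features are all assumptions made for this example, not the features used in the study; it also assumes a pressure of 0 means the pen is lifted.

```python
import math
from dataclasses import dataclass


@dataclass
class PenSample:
    """One hypothetical pen sample: timestamp (s), position, and pressure."""
    t: float
    x: float
    y: float
    pressure: float  # assumption: 0.0 means the pen is lifted


def extract_features(samples):
    """Summarize one answer's pen samples as a small feature dictionary."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")

    path_length = 0.0   # distance travelled while the pen is down
    writing_time = 0.0  # time spent with the pen down
    pause_time = 0.0    # time spent with the pen lifted

    for prev, cur in zip(samples, samples[1:]):
        dt = cur.t - prev.t
        if prev.pressure > 0 and cur.pressure > 0:
            path_length += math.hypot(cur.x - prev.x, cur.y - prev.y)
            writing_time += dt
        else:
            pause_time += dt

    pressures = [s.pressure for s in samples if s.pressure > 0]
    return {
        "path_length": path_length,
        "writing_time": writing_time,
        "pause_time": pause_time,
        "mean_speed": path_length / writing_time if writing_time > 0 else 0.0,
        "mean_pressure": sum(pressures) / len(pressures) if pressures else 0.0,
    }
```

A feature dictionary like this, computed per question, could then be passed to any standard classifier together with a label indicating whether the learner had retained the material.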

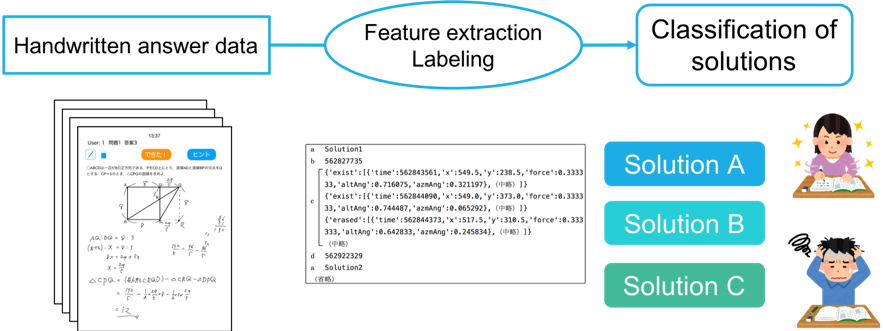

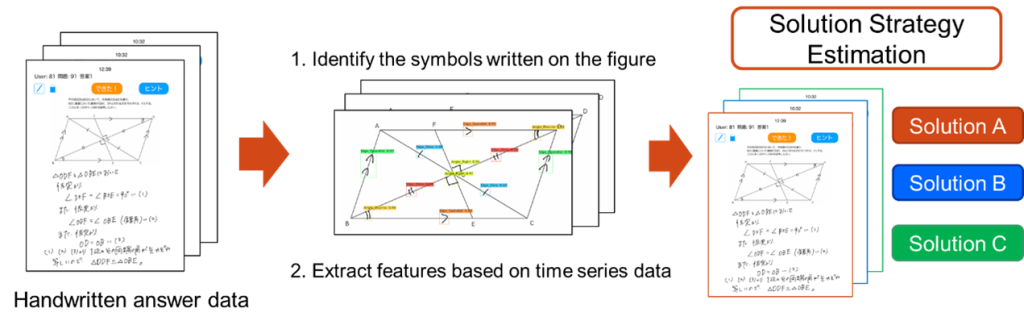

Automatic Estimation of Solution Strategies using Online Answer Data

In this research, we estimate a learner’s thinking process by automatically recognizing what the learner writes on geometric figures.

We use image processing to automatically recognize symbols on geometric figures from handwritten answer data and analyze time-series data to identify the solution method used by the learner. This research aims to clarify the thinking process of individual learners.

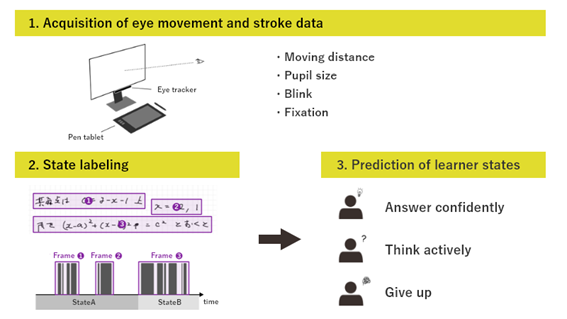

Automatic Estimation of the Presence of Answer Strategies while Solving Mathematical Problems using Eye Movements

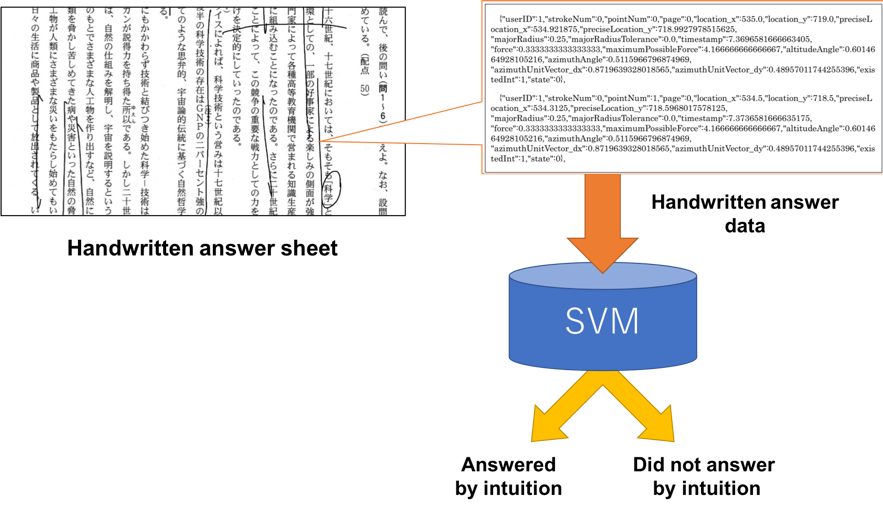

Recently, online learning has been spreading rapidly, and there is a need to provide an optimal learning environment to each learner. One of the methods for providing such adaptive learning environments is the use of eye movement data. In this study, we use eye movement data from an eye tracker and handwriting data from a pen tablet to capture changes in the respondent’s state while solving mathematical problems, and to determine whether the respondent is solving the problems with a solution strategy.

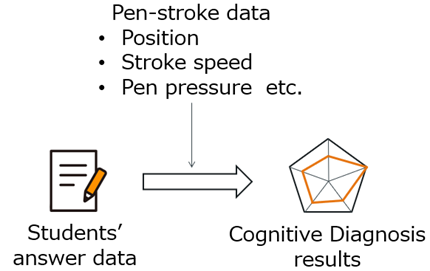

Cognitive Diagnosis of Mathematical Skills using Pen-Stroke Data

In recent years, with the widespread use of ICT devices in educational settings, there has been growing attention on providing personalized education to each learner. One approach to achieving this personalized education is “Cognitive Diagnosis,” which aims to identify learners’ strengths and weaknesses. This study focuses on improving the accuracy of cognitive diagnosis models by utilizing time-series pen-stroke data obtained from ICT devices, such as stroke speed and pen pressure.

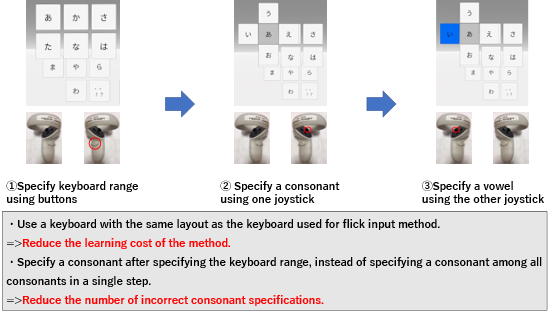

Text Entry Method Combining Joystick Operation and Button Operation in VR

In recent years, Virtual Reality (VR) has been expected to find applications in various fields, and efficient text entry methods are essential for its use in those fields.

In this study, we propose a new text entry method that uses a keyboard with the same layout as the conventional flick input method used in the real world, and that combines joystick and button operations to specify input characters. Our proposed method aims to achieve a low learning cost and a low error rate.

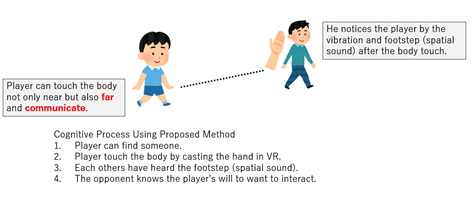

UI Design that Lets Players Recognize Each Other’s Presence in Social VR

Social VR allows players to interact with each other in a VR room. However, it is difficult for players to recognize each other and to interact with others. In this study, we focus on body touch as a way to make players aware of each other’s presence, and propose an “interaction-friendly UI” that allows players to touch each other’s bodies from a distance. Our aim is to make it possible for players to feel each other’s presence and to communicate their intention to interact.

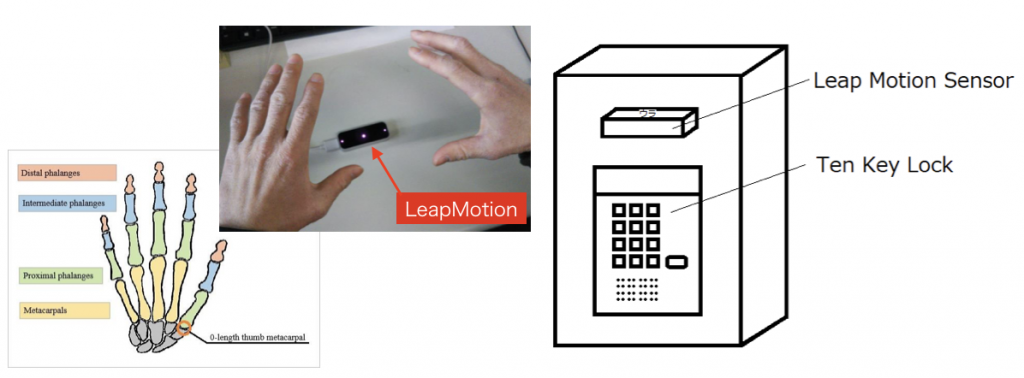

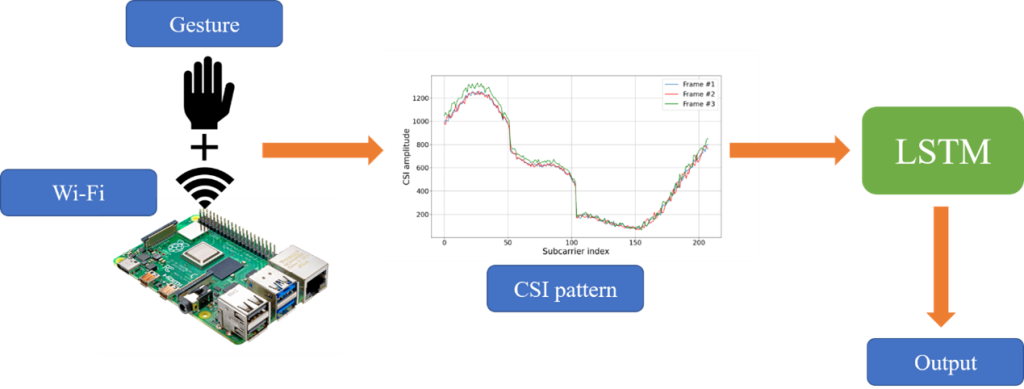

Environment-Independent Gesture Recognition based on Wi-Fi CSI with Raspberry Pi

Traditional interaction methods such as touch and voice may not cover all needs as IoT grows. Wi-Fi signal-based gesture recognition is considered an effective new interaction medium. This research focuses on applying a deep learning gesture recognition method that uses Channel State Information (CSI) on a low-cost Raspberry Pi. We also aim to overcome the environment dependence seen in previous research with our CSI preprocessing methods.
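The preprocessing methods themselves are not described here. Purely as an illustration of the kind of step such a pipeline might contain, the sketch below converts complex CSI values to amplitudes and standardizes each subcarrier over time, which removes per-subcarrier static offsets. The function names and the choice of z-score normalization are assumptions for this example and should not be read as the group's method.

```python
import math


def csi_amplitude(frames):
    """Convert complex CSI frames (packets x subcarriers) to amplitudes."""
    return [[abs(c) for c in frame] for frame in frames]


def normalize_per_subcarrier(amplitudes, eps=1e-8):
    """Z-score each subcarrier across packets (generic step, an assumption).

    Subtracting the per-subcarrier mean removes static offsets; dividing by
    the standard deviation puts all subcarriers on a comparable scale.
    """
    n_packets = len(amplitudes)
    n_sub = len(amplitudes[0])
    normalized = [[0.0] * n_sub for _ in range(n_packets)]
    for k in range(n_sub):
        column = [row[k] for row in amplitudes]
        mean = sum(column) / n_packets
        var = sum((v - mean) ** 2 for v in column) / n_packets
        std = math.sqrt(var)
        for i in range(n_packets):
            # eps avoids division by zero for a constant subcarrier
            normalized[i][k] = (column[i] - mean) / (std + eps)
    return normalized
```

The normalized matrix (packets by subcarriers) is the sort of input a gesture classifier could consume; a real pipeline would likely add filtering and segmentation as well.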

Analysis of Handwritten Stroke Information in Japanese Long-passage Reading Problem

Classification of Solutions using Handwritten Data

By analyzing handwritten data captured during learning, it is possible to provide optimal learning support for each learner in online learning, where it is difficult to monitor the learner’s behavior.